
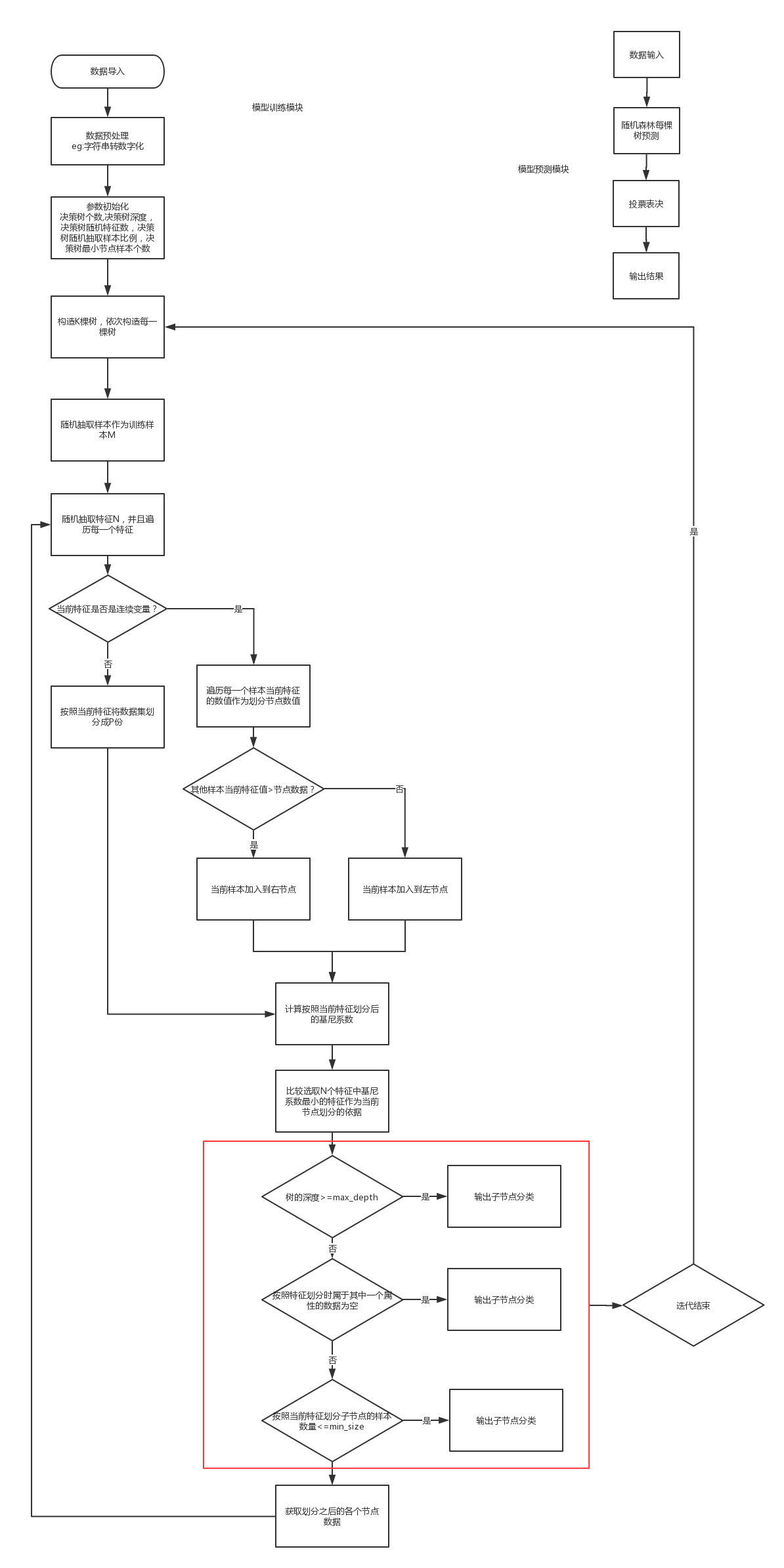

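I tried building a binary-classification random forest on a dataset whose features are all continuous, and also tried drawing a flowchart of the random forest.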
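As a rough companion to the flowchart, the sketch below shows the forest-level steps of the standard random forest recipe that surround the per-tree code: drawing a bootstrap sample of rows, picking a random subset of feature columns, and combining per-tree predictions by majority vote. The helper names `bootstrap_indices`, `random_feature_subset`, and `majority_vote` are hypothetical and do not appear in the listing; this is a minimal standard-library illustration under those assumptions, not the implementation used in this post.

```python
import random
from collections import Counter


def bootstrap_indices(n_rows, rng):
    """Draw n_rows row indices with replacement (one bootstrap sample)."""
    return [rng.randrange(n_rows) for _ in range(n_rows)]


def random_feature_subset(n_total, n_features, rng):
    """Pick n_features distinct column indices out of n_total."""
    return rng.sample(range(n_total), n_features)


def majority_vote(predictions):
    """Return the most common label among the per-tree predictions."""
    return Counter(predictions).most_common(1)[0][0]


rng = random.Random(0)                    # fixed seed so the run is repeatable
rows = bootstrap_indices(10, rng)         # rows used to train one tree
cols = random_feature_subset(60, 7, rng)  # candidate features for one split
vote = majority_vote([1, 0, 1, 1, 0])     # labels predicted by five trees
print(len(rows), len(cols), vote)
```

Each tree would be trained on its own `rows`, and the forest's prediction for a sample would be the `majority_vote` over all trees.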

Code

# -*- coding: utf-8 -*-
#-------------------
#@Author: cuijian
#-------------------
import pandas as pd
import numpy as np
from random import randrange
from math import floor,sqrt
from sklearn.preprocessing import LabelEncoder
# sklearn.cross_validation was removed in scikit-learn 0.20;
# train_test_split now lives in sklearn.model_selection
from sklearn.model_selection import train_test_split

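class randomforest(object):
    '''
    Random forest model
    '''

    def load_data(self,filename):
        '''
        Load the test data (the open-source sonar dataset)
        filename: path and name of the file
        '''
        data=pd.read_csv(filename,header=None)
        # The last column is the class label; encode it as integers
        target=data.iloc[:,-1]
        self.target=LabelEncoder().fit_transform(list(target))
        # Every column except the last is a feature
        # (the slice end is exclusive, so shape[1]-1 keeps all feature columns)
        self.data=data.iloc[:,0:data.shape[1]-1]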

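    def subsample(self,ratio):
        '''
        ratio is passed to train_test_split as test_size, so it is the
        fraction of rows that ends up in the first returned pair
        example: 0.7 -> 70% of the rows in Testdata, 30% in Traindata
        '''
        Traindata,Testdata,Traintarget,Testtarget=train_test_split(self.data,self.target,test_size=ratio, random_state=0)
        return Testdata,Testtarget,Traindata,Traintarget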

    def buildtree(self,traindata,traintarget,max_depth,min_size, n_features):
        '''
        Build one decision tree
        max_depth: maximum depth of the tree
        min_size: a group with at most this many samples becomes a leaf
        n_features: number of randomly sampled features per split
        '''
        root=self.get_root(traindata,traintarget,n_features)
        self.construct_tree(root,traindata,traintarget,max_depth,min_size,n_features,1)
        return root

    def node_end(self,group,target):
        '''
        Terminal node handling: return the predicted class,
        i.e. the most frequent label among the samples in group
        '''
        return max(set(target[group]),key=lambda x:list(target[group]).count(x))

    def construct_tree(self,node,data,target,max_depth,min_size, n_features,depth):
        '''
        Recursively grow the tree below node
        '''
        leftgroup,rightgroup=node['groups']
        del(node['groups'])
        # No real split (one side is empty): both children become the same leaf
        if not leftgroup or not rightgroup:
            node['left'] = node['right'] = self.node_end(leftgroup + rightgroup,target)
            return
        # Maximum depth reached: turn both children into leaves
        if depth >= max_depth:
            node['left'], node['right'] = self.node_end(leftgroup,target), self.node_end(rightgroup,target)
            return
        # Left child: leaf if the group is small enough, otherwise split again
        if len(leftgroup) <= min_size:
            node['left'] = self.node_end(leftgroup,target)
        else:
            node['left'] = self.get_root(data.iloc[leftgroup,:],target[leftgroup],n_features)
            self.construct_tree(node['left'],data.iloc[leftgroup,:],target[leftgroup], max_depth, min_size, n_features, depth+1)
        # Right child: same rule as the left child
        if len(rightgroup) <= min_size:
            node['right'] = self.node_end(rightgroup,target)
        else:
            node['right'] = self.get_root(data.iloc[rightgroup,:],target[rightgroup],n_features)
            self.construct_tree(node['right'],data.iloc[rightgroup,:],target[rightgroup], max_depth, min_size, n_features, depth+1)

    def get_root(self,traindata,traintarget,n_features):
        '''
        Find the best split node for a decision tree
        traindata: training data
        '''
        b_index, b_value, b_score, b_groups = 999, 999, 999, None
        # Randomly pick n_features distinct feature columns
        features = list()
        while len(features) < n_features:
            index =randrange(traindata.shape[1])
            if index not in features:
                features.append(index)
        # Try every sample's value of every picked feature as a threshold
        # and keep the split with the lowest Gini score
        for index in features:
            for rows in range(traindata.shape[0]):
                groups =self.groups_split(index, traindata.iloc[rows,index], traindata)
                gini = self.giniscore(groups, traintarget)
                if gini < b_score:
                    b_index, b_value, b_score, b_groups = index,traindata.iloc[rows,index], gini, groups
        return {'index':b_index, 'value':b_value, 'groups':b_groups}

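    def groups_split(self,index,nodeValue,data):
        '''
        Split the dataset into two subsets by comparing every row's value
        of the selected feature with nodeValue
        index: index of the feature column
        nodeValue: the selected sample's value for that feature
        data: the original training data
        Returns:
        leftgroup, rightgroup: positional indices of the samples after the
        split; leftgroup is the left node, rightgroup the right node
        '''
        leftgroup,rightgroup=list(),list()
        datalength=data.shape[0]
        for row in range(datalength):
            if data.iloc[row,index]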
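The listing breaks off inside `groups_split`, and `giniscore`, which `get_root` calls, is not shown. As a stand-in, here is a minimal sketch of how these two steps are commonly written for a binary split on a continuous feature. It assumes that rows with a value strictly below the threshold go left and that the score is the size-weighted Gini impurity of the two groups. The function names `split_rows` and `gini_score` and the plain-list inputs are hypothetical and may differ from the original code.

```python
def split_rows(values, threshold):
    """Partition row positions by comparing one feature column to a threshold.

    Assumption: rows with value < threshold go left, all others go right.
    """
    left, right = [], []
    for row, value in enumerate(values):
        (left if value < threshold else right).append(row)
    return left, right


def gini_score(groups, labels):
    """Size-weighted Gini impurity of a split (0.0 means every group is pure)."""
    total = sum(len(group) for group in groups)
    score = 0.0
    for group in groups:
        if not group:
            continue  # an empty group contributes nothing
        group_labels = [labels[i] for i in group]
        impurity = 1.0
        for cls in set(group_labels):
            p = group_labels.count(cls) / len(group)
            impurity -= p * p
        score += impurity * len(group) / total
    return score


values = [0.1, 0.4, 0.35, 0.8]   # one feature column
labels = [0, 0, 1, 1]            # class label of each row
left, right = split_rows(values, 0.35)
print(left, right, gini_score((left, right), labels))
```

A lower score means a cleaner split, which matches how `get_root` keeps the candidate with the smallest `gini`.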
